As AI becomes more deeply embedded in global business operations, developers and business owners must test their systems effectively so that they work to their full potential. According to recent reports, the AI market was worth around 200 billion U.S. dollars last year and is expected to exceed 1.8 trillion U.S. dollars by 2030. In addition, McKinsey’s 2024 survey found that 72% of respondents reported AI adoption in one or more business functions, 50% in two or more, 27% in three or more, 15% in four or more, and 8% in five or more.

Business owners and software developers often get lost in the variety of testing types and tools, and are unsure how involved QA engineers should be in checking AI software. This article is a universal guide that explains why QA specialists matter when testing AI solutions. We cover why AI testing is important in project development, what AI testing includes, and the most effective methods QA experts use to test artificial intelligence. Read on to learn how to test AI models effectively and in a way that improves your product.

The role of checks in AI

AI-based apps, tools, and other products are becoming increasingly widespread, used by established companies and startups of all sizes across different niches. At the same time, scrutiny of AI is growing, and without proper testing it is impossible to predict how accurate, legally compliant, and secure your AI product will be after launch.

As noted in the Stanford AI Index Report 2024, the number of AI-related regulations in the United States has risen significantly, from a single regulation in 2016 to 25 in 2023. In 2023 alone, the total number of AI-related regulations increased by 56.3%. As AI’s reputation becomes a substantial global concern, building AI that is both compliant with regulations and efficient is a primary task for developers, not only in the US but worldwide.

The most reliable way to achieve this is through AI testing, which requires a sound, well-structured strategy and the skills of certified QA specialists. Each AI project needs its own testing approach, primarily because of the following factors:

Differences in app architecture. When working out how to test AI applications, keep in mind that their architectures differ widely, so each architecture type requires its own method of checking capabilities and safety.

No standard methodology. Unlike conventional software, which relies on standardized testing practices and scripts, AI has no universally accepted testing methods.

Model and data dependency. An AI’s behavior depends on the model itself and on the quality of the data used to train it. Testing must account for this dependency, evaluating not only the responses but also the training data and training process behind them.

All these factors shape the choice of the most effective testing approach for your AI-based product or app. Therefore, plan every step of your project instead of applying a single standard testing procedure.

Definition and methodology of AI checks

What is AI testing? It is a comprehensive evaluation of an AI system that confirms it works as planned. Testing also helps align the AI with ethical standards and ensures that it delivers reliable, unbiased outcomes. It can also lay the groundwork for clear AI usage policies in enterprises, since 63% of entrepreneurs say their company does not have an AI usage policy in place (DigitalOcean, 2023). AI testing covers the following areas:

Behavioral verification. AI must behave as expected in both common and edge or stress cases. Testing verifies that the system responds appropriately to the data it covers, avoids overfitting, and delivers reliable predictions.

Bias identification and mitigation. AI often inherits biases from its training data. Testing helps identify these biases so that the model or dataset can be adjusted to reduce ethical and operational risks.

Data integrity assurance. The quality of the data an AI receives largely determines how well it functions. Testing helps confirm that data is accurate and complete throughout the entire lifecycle of an AI product or app.

Adaptability validation. Unlike static software, AI has to keep adapting to new inputs. Testing checks whether the system maintains good results over time as it encounters new information and requests.

Adherence to laws and regulations. Making sure that your product complies with international laws and regulations is an integral part of AI testing, since legal compliance is one of the primary concerns around AI, especially in sectors that handle sensitive information, such as finance or healthcare.
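To make bias identification more concrete, here is a minimal Python sketch that compares a model’s accuracy across groups of users. The labels, predictions, group names, and the 0.1 gap threshold are all made-up assumptions for illustration; a real project would use a held-out test set and fairness criteria chosen for its domain.

```python
from collections import defaultdict

# Hypothetical tolerance: flag the model if accuracy differs by more than
# 10 percentage points between any two groups.
MAX_ALLOWED_GAP = 0.1

def accuracy_by_group(y_true, y_pred, groups):
    """Return {group: accuracy} computed separately for each group."""
    correct = defaultdict(int)
    total = defaultdict(int)
    for truth, pred, group in zip(y_true, y_pred, groups):
        total[group] += 1
        correct[group] += int(truth == pred)
    return {g: correct[g] / total[g] for g in total}

def bias_report(y_true, y_pred, groups):
    """Per-group accuracy, the largest gap between groups, and a pass/fail flag."""
    per_group = accuracy_by_group(y_true, y_pred, groups)
    gap = max(per_group.values()) - min(per_group.values())
    return per_group, gap, gap <= MAX_ALLOWED_GAP

# Toy data: the model is right 3 of 4 times for group A but only 2 of 4 for group B.
y_true = [1, 0, 1, 1, 0, 1, 0, 0]
y_pred = [1, 0, 1, 0, 0, 1, 1, 1]
groups = ["A", "A", "A", "A", "B", "B", "B", "B"]

per_group, gap, passed = bias_report(y_true, y_pred, groups)
print(per_group, round(gap, 2), passed)  # {'A': 0.75, 'B': 0.5} 0.25 False
```

Accuracy parity is only one of several possible fairness checks; which metric fits depends on the product and the risks involved.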

In short, AI testing always requires specific strategies to address the issues created by AI’s inherently dynamic nature. Looking ahead, more organizations (39%) expect AI to increase their workforce size than expect it to reduce headcount (22%). This trend likely reflects the growing demand for AI experts as AI functions and testing methods keep expanding and call for new skills and qualifications.

Key differences in AI tests

As a rule, AI testing performed by QA specialists has two sides. First, it is a long, continuous process that starts with the first version of an AI project and continues well after the AI is live and in use. Second, it safeguards the quality of the data the AI relies on. Here is each in more detail.

AI system tests are continuous

Because adaptiveness is AI’s defining characteristic, it calls for continuous retraining and testing to maintain and improve performance. Here’s why:

Preventing AI degradation. Over time, an AI model’s performance can degrade as data patterns shift, which shows up as declining prediction accuracy. Continuous retraining helps your AI stay relevant and accurate in real-world scenarios.

Better adaptation to new information and inputs. AI operates in environments where new patterns and data types emerge frequently. Continuous testing checks the model’s ability to adjust to these changes, so it stays reliable and accurate.

Keeping software aligned. As models evolve through retraining, the surrounding software infrastructure may also need adjustments. Testing verifies that the updated model integrates smoothly with the existing application and works as the developers intended.
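One common way to catch this kind of degradation early is to compare the distribution of recent production inputs (or model scores) with the distribution seen during training. The sketch below computes the Population Stability Index (PSI) in plain Python. The bin edges, the sample scores, and the 0.2 alert threshold (a frequently used rule of thumb, not a universal standard) are assumptions to tune per project.

```python
import math

# Rule-of-thumb threshold: a PSI above 0.2 is often treated as a significant shift.
PSI_ALERT_THRESHOLD = 0.2

def bin_shares(values, edges):
    """Share of values falling into each [edges[i], edges[i + 1]) bin."""
    counts = [0] * (len(edges) - 1)
    for v in values:
        for i in range(len(edges) - 1):
            if edges[i] <= v < edges[i + 1]:
                counts[i] += 1
                break
    return [c / len(values) for c in counts]

def population_stability_index(expected, actual, edges, eps=1e-6):
    """PSI = sum((a - e) * ln(a / e)) over bins; eps avoids log(0) for empty bins."""
    total = 0.0
    for e, a in zip(bin_shares(expected, edges), bin_shares(actual, edges)):
        e, a = max(e, eps), max(a, eps)
        total += (a - e) * math.log(a / e)
    return total

# Open-ended outer bins so that no value is dropped.
edges = [float("-inf"), 0.25, 0.5, 0.75, float("inf")]
training_scores = [0.1, 0.2, 0.3, 0.4, 0.6, 0.7, 0.8, 0.9]
production_scores = [0.6, 0.7, 0.8, 0.9, 0.85, 0.95, 0.65, 0.7]

psi = population_stability_index(training_scores, production_scores, edges)
# In a real pipeline this flag would trigger an alert and a retraining review.
drift_detected = psi > PSI_ALERT_THRESHOLD
```

Here the production scores have all moved into the upper half of the range, so the PSI lands far above the threshold; identical distributions would give a PSI of zero.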

The DigitalOcean 2023 survey reports that 73% of respondents currently rely on artificial intelligence for personal and/or business use. To ensure stable functionality of an AI product, testing should be a top priority.

Regular tests improve data collection for AI

Another reason every project needs ongoing AI testing is effective data gathering. An AI application must be trained to retrieve exactly the data that the user’s request calls for. Beyond that, it must ensure that the information it retrieves is accurate and free of false, misleading, or fraudulent statements. The key goals of regular AI testing include:

Quality assurance. Testing can find errors in the data used to build an AI product. Better data integrity improves model performance and reduces the risk of incorrect outputs.

AI precision. Continuous testing helps ensure that AI predictions meet accuracy standards across different situations. It checks not only whether predictions are correct but also whether they are relevant. Testing also shows how your AI retrieves the information you ask for, where it retrieves it from, and why it prefers that source over others.

Reliability of responses. For AI chatbots, testing is needed to ensure consistent, appropriate, and human-like responses. It identifies scenarios where the bot may fail or share irrelevant information, enabling targeted improvements.

Model resilience. Tests can measure how resilient an AI is to incorrect inputs, noise, or overload. Well-designed models can handle a wide range of stress conditions without functional errors. However, that resilience has to be built in from the start, and building it in requires continuous testing.
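A basic resilience check can be sketched as a noise test: add small random perturbations to each input many times and count how often the prediction stays the same. In this Python sketch, `toy_model` is a hypothetical stand-in for a real model, and the noise level, number of trials, and sample inputs are assumptions.

```python
import random

def toy_model(features):
    """Hypothetical stand-in for a real model: flags a transaction as risky
    when a weighted score of (amount, velocity) exceeds 0.5."""
    amount, velocity = features
    return int(0.7 * amount + 0.3 * velocity > 0.5)

def stability_rate(model, inputs, noise=0.01, trials=20, seed=0):
    """Fraction of inputs whose prediction never changes under small Gaussian noise."""
    rng = random.Random(seed)  # fixed seed keeps the test reproducible
    stable = 0
    for x in inputs:
        baseline = model(x)
        perturbed_preds = (
            model([v + rng.gauss(0, noise) for v in x]) for _ in range(trials)
        )
        if all(pred == baseline for pred in perturbed_preds):
            stable += 1
    return stable / len(inputs)

# The last input sits exactly on the decision boundary, so small noise can flip it.
inputs = [[0.1, 0.2], [0.9, 0.8], [0.2, 0.9], [0.5, 0.5]]
rate = stability_rate(toy_model, inputs)
```

Inputs far from the decision boundary stay stable, while the borderline input is very likely to flip; cases like that are where a team would look first when hardening a model.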

As a result, continuous testing helps AI remain effective, adaptable, and aligned with real-world requirements. The testing process is about creating different conditions and observing how quickly and effectively the AI reacts to them. At the same time, it drives improvements in both the quality of responses and the trustworthiness of an AI product.

AI application checks in detail

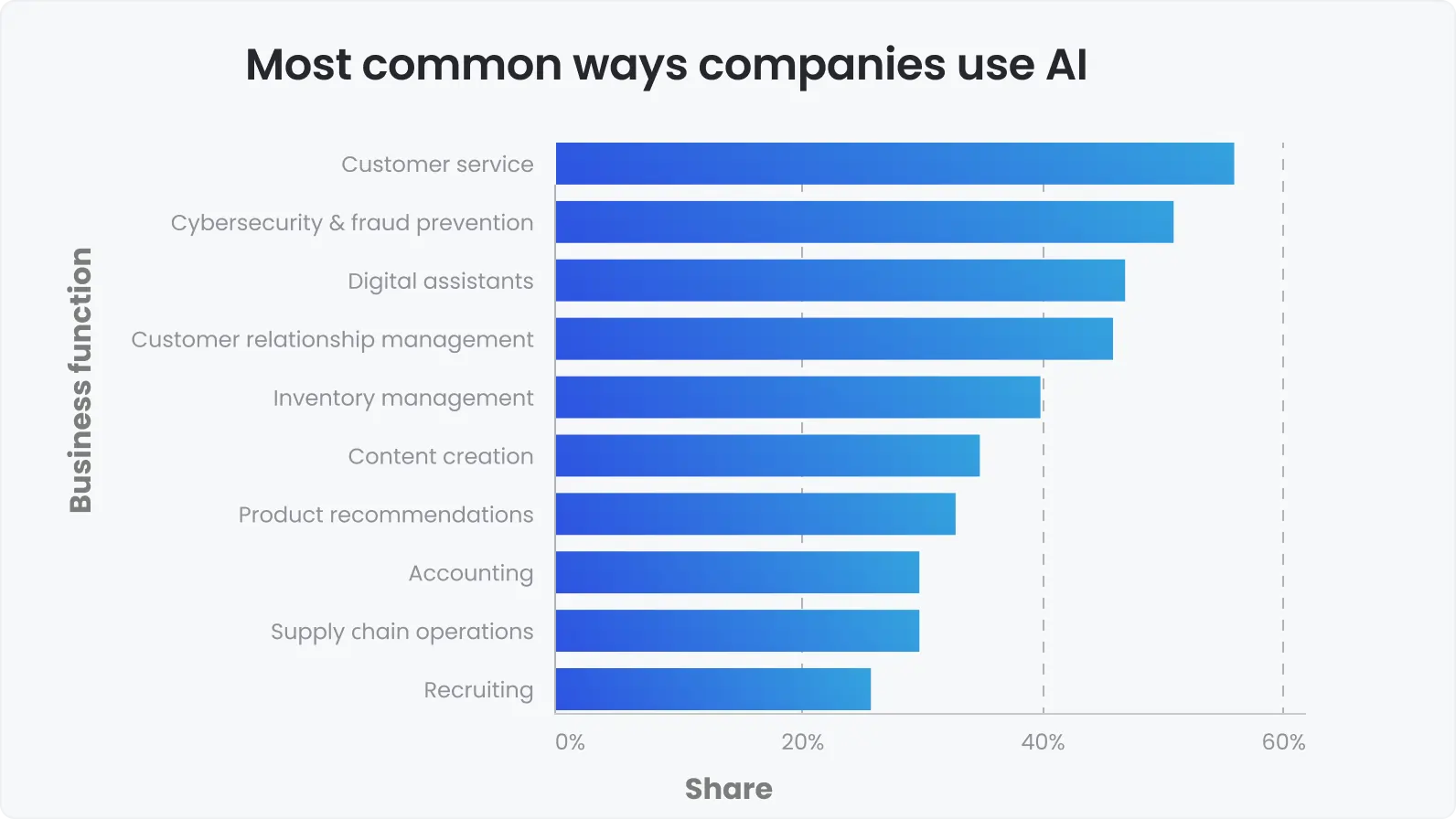

As reported by McKinsey, 72% of organizations have adopted AI in at least one business function. Successful adoption of AI systems depends, among other things, on efficient and detail-focused testing. Therefore, it is important to break the procedure into steps before implementing a working strategy for your own project.

Testing AI applications requires specialized, continuous processes to handle the challenges of dynamic models and complex data. It verifies the accuracy of an AI and checks how reliably and precisely it reacts in different situations. Once you have verified that your AI app reacts appropriately and provides adequate responses regardless of request complexity and overall workload, you can push your product live with confidence.

Integration testing evaluates how well AI interacts with other software, while real-world testing simulates actual conditions. Continuous monitoring and retraining help keep the AI behaving as expected amid rapidly changing data and user needs. Security and privacy testing protect against vulnerabilities, helping your AI stay accurate, ethical, and effective in any environment.

Essential aspects of AI checks

Testing AI systems is needed to create AIs that are accurate and trustworthy in real-world scenarios. Unlike traditional software, AI depends on trained models and the data behind them, which makes its behavior dynamic and subject to change. A high-quality testing process focuses on validating both the data and the algorithm to detect and resolve issues that could undermine the system’s effectiveness or ethical integrity.

Data validation

The first aspect of AI testing is data validation, which checks the accuracy of all the information fed into an AI during the training stage. Validating the data used to train and test an AI serves the following objectives:

Checking the integrity, quality, and relevance of the data used to train an AI product.

Detecting and fixing errors, inconsistencies, biases, and redundancies in datasets.

Confirming that new data meets the model’s requirements and that functionality is preserved.

Validating preprocessing pipelines to confirm that data transformations maintain accuracy.

Data validation is crucial because AI-based products and apps must, above all, be precise and reliable. Since AI is largely used for data collection and processing, validation is one of the most effective ways to establish and check an AI’s effectiveness.
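Several of these objectives (missing values, duplicates, and unexpected labels) can be checked automatically before training starts. The Python sketch below assumes a hypothetical support-ticket dataset stored as a list of dictionaries with `text` and `label` fields; the field names and allowed labels are illustrative only.

```python
def validate_records(records, required_fields, allowed_labels):
    """Return a list of human-readable issues found in a training dataset."""
    issues = []
    seen = set()
    for i, row in enumerate(records):
        # Missing or empty required fields.
        missing = [f for f in required_fields if row.get(f) in (None, "")]
        if missing:
            issues.append(f"row {i}: missing {', '.join(missing)}")
        # Labels outside the agreed set often indicate typos or schema drift.
        label = row.get("label")
        if label is not None and label not in allowed_labels:
            issues.append(f"row {i}: unexpected label {label!r}")
        # Exact duplicates can over-weight some examples during training.
        key = tuple(row.get(f) for f in required_fields)
        if key in seen:
            issues.append(f"row {i}: duplicate of an earlier row")
        seen.add(key)
    return issues

records = [
    {"text": "Card charged twice", "label": "billing"},
    {"text": "App crashes on login", "label": "bug"},
    {"text": "", "label": "bug"},
    {"text": "Card charged twice", "label": "billing"},
    {"text": "Where is my order?", "label": "shiping"},
]
issues = validate_records(records, ["text", "label"], {"billing", "bug", "shipping"})
for issue in issues:
    print(issue)
```

Running this reports the empty text in row 2, the duplicate in row 3, and the misspelled label in row 4, each of which would otherwise flow silently into training.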

Core algorithm

Beyond the precision of the data fed into an AI, it is important to verify how the AI reacts and responds to different requests and inquiries. Alongside data validation, core algorithm testing helps check the accuracy of your AI product. It involves:

Assessing the AI model’s foundational algorithm for accuracy and adaptability.

Measuring key performance metrics to confirm that the outputs match the expected results.

Checking the algorithm’s ability to improve with new patterns.

Conducting tests to confirm the algorithm performs optimally under increased workloads or larger data sets.

By covering all these aspects, testing helps make AI reliable and fair, and helps it handle errors in its main functions. Treat both data validation and core algorithm testing as primary steps in the final improvement of your AI application as you prepare it for launch.
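Measuring key performance metrics usually means turning predictions into numbers such as precision, recall, and F1 and comparing them with agreed release thresholds. Here is a small Python sketch of that gate; the threshold values and the sample predictions are hypothetical.

```python
# Hypothetical release thresholds agreed with the product team.
THRESHOLDS = {"precision": 0.75, "recall": 0.75, "f1": 0.75}

def precision_recall_f1(y_true, y_pred, positive=1):
    """Compute precision, recall, and F1 for one positive class."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == positive and p == positive)
    fp = sum(1 for t, p in pairs if t != positive and p == positive)
    fn = sum(1 for t, p in pairs if t == positive and p != positive)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1

def meets_thresholds(y_true, y_pred):
    """Return (passed, scores); passed is False if any metric is below its threshold."""
    p, r, f = precision_recall_f1(y_true, y_pred)
    scores = {"precision": p, "recall": r, "f1": f}
    failures = {name: value for name, value in scores.items() if value < THRESHOLDS[name]}
    return not failures, scores

# 4 true positives, 1 false positive, 1 false negative -> precision = recall = 0.8.
y_true = [1, 1, 1, 1, 0, 0, 0, 0, 1, 0]
y_pred = [1, 1, 1, 0, 0, 0, 1, 0, 1, 0]
ok, scores = meets_thresholds(y_true, y_pred)
```

Keeping the thresholds in one place makes it easy to run the same gate after every retraining cycle.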

Key strategies for AI-based product tests

According to DigitalOcean, 45% of respondents believe that AI-based tools make their job easier. To launch a successful, long-lasting AI-based product, any company must approach testing diligently and with close attention to detail.

But how do you test artificial intelligence most effectively? Different AI testing strategies fit different projects and objectives, including functional, usability, integration, performance, API, and security tests.

Functional tests

Functional testing checks that an AI product’s key capabilities work as intended under different conditions. Take an image recognition AI: it must correctly classify people and objects in photographs and other images. Functional testing uses varied data to check precision and evaluate how well the model generalizes.

This type of testing suits applications such as scam detection systems, where suspicious activity must be caught in time, and maintenance systems, where predictions of equipment malfunctions need to be precise. Whenever your AI must provide accurate, to-the-point information about a specific object or piece of information, functional testing is a must.
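Functional tests are often written as a table of inputs paired with the outputs a domain expert expects, including boundary cases. In this Python sketch, `classify_transaction` is a hypothetical rule-based stand-in for a scam detection model; in a real project the same case table would be run against the model’s own prediction call.

```python
def classify_transaction(amount, country, hour):
    """Hypothetical rule-based stand-in for a fraud model's prediction call."""
    risky = amount > 5000 or (country not in {"US", "CA"} and hour < 6)
    return "fraud" if risky else "ok"

# Each case pairs model inputs with the label a domain expert expects.
FUNCTIONAL_CASES = [
    ((120.0, "US", 14), "ok"),      # typical daytime purchase
    ((9800.0, "US", 14), "fraud"),  # unusually large amount
    ((60.0, "BR", 3), "fraud"),     # foreign card used at night
    ((5000.0, "CA", 2), "ok"),      # boundary case: exactly at the amount limit
]

def run_functional_suite(predict, cases):
    """Return (inputs, expected, actual) for every case the model gets wrong."""
    failures = []
    for args, expected in cases:
        actual = predict(*args)
        if actual != expected:
            failures.append((args, expected, actual))
    return failures

failures = run_functional_suite(classify_transaction, FUNCTIONAL_CASES)
print(failures or "all functional cases passed")
```

Listing each failing case with its expected and actual output makes regressions easy to spot after a model update.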

Usability tests

Usability testing focuses on how effectively customers can use the AI product. It verifies that the application is easy to navigate and works as users expect. For an AI-powered chatbot, for instance, usability testing would evaluate whether the bot gives clear responses, understands user requests, and maintains a natural, human-like conversation.

This method is especially valuable for customer-facing apps such as AI-based assistants, e-commerce recommendation tools, or medical apps that guide patients through medical inquiries. User feedback helps refine interaction design and improve the overall impression of the AI. This is why it helps to build feedback forms into the AI app so users can share their impressions and suggest improvements as they use the product.

Integration tests

Integration testing shows how an AI product works with the other components of a system. For example, an AI-powered personal finance app aggregates data from banks and sends users relevant updates. Testing checks that data flows from the APIs to the AI and then to the user interface without interruption.

It also checks compatibility with other products and systems, such as devices in smart home applications or payment systems on e-commerce platforms. This testing suits applications with many connected components, such as autonomous vehicles or logistics apps. In practice, AI is often used in tandem with APIs, especially in large companies and corporations, so the two must work together reliably at all times, and integration testing is the best way to confirm that.
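For the personal finance example, an integration test can replace the real bank API with a stub and check that data flows through the AI step into the payload the user interface receives. Every name here (`FakeBankClient`, `categorize`, `build_dashboard`) is hypothetical, and the categorization step is a trivial rule used only to keep the sketch self-contained.

```python
from dataclasses import dataclass

@dataclass
class Transaction:
    description: str
    amount: float

class FakeBankClient:
    """Stub standing in for a real bank API client (hypothetical)."""
    def fetch_transactions(self, account_id):
        return [
            Transaction("Coffee", -4.5),
            Transaction("Salary", 3000.0),
            Transaction("Rent", -1200.0),
        ]

def categorize(tx):
    """Hypothetical stand-in for the AI categorization model."""
    return "income" if tx.amount > 0 else "expense"

def build_dashboard(bank, account_id):
    """Pipeline under test: bank API -> AI model -> payload for the UI."""
    totals = {"income": 0.0, "expense": 0.0}
    for tx in bank.fetch_transactions(account_id):
        totals[categorize(tx)] += tx.amount
    return {"account": account_id, "totals": totals}

payload = build_dashboard(FakeBankClient(), "acc-42")
print(payload)
```

Because the bank client is injected, the same test can later be pointed at a sandbox version of the real API without changing the pipeline code.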

Performance tests

Performance testing checks how an AI responds to different levels of workload. For instance, a real-time traffic prediction system must process varying volumes of data quickly and without lag. This testing assesses the system’s speed, stress response, and resource usage to confirm that it responds adequately under load.

It’s particularly important for AI with strict performance requirements, such as trading platforms that track market movements in real time or medical systems that assist in critical diagnoses, where delays can have severe consequences. These apps must be not only precise but also fast, delivering up-to-date information when the customer needs it.
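A simple latency check can be sketched with Python’s standard library: send many concurrent requests, time each one, and compare the 95th-percentile latency with a budget. `fake_predict`, the 10 ms simulated inference time, and the 0.2-second budget are assumptions; a real load test would target the deployed endpoint, often with a dedicated load-testing tool. Threads work here because the stub only sleeps. `statistics.quantiles` requires Python 3.8 or later.

```python
import statistics
import time
from concurrent.futures import ThreadPoolExecutor

# Hypothetical latency budget for a real-time prediction service.
P95_BUDGET_SECONDS = 0.2

def fake_predict(payload):
    """Hypothetical stand-in for a model endpoint; sleeps to simulate inference."""
    time.sleep(0.01)
    return {"ok": True, "input": payload}

def timed_call(payload):
    """Run one prediction and return how long it took, in seconds."""
    start = time.perf_counter()
    fake_predict(payload)
    return time.perf_counter() - start

def load_test(n_requests=50, concurrency=10):
    """Send n_requests through a thread pool and summarize the latencies."""
    with ThreadPoolExecutor(max_workers=concurrency) as pool:
        latencies = list(pool.map(timed_call, range(n_requests)))
    return {
        "median": statistics.median(latencies),
        # quantiles(n=20) returns 19 cut points; the last one is the 95th percentile.
        "p95": statistics.quantiles(latencies, n=20)[-1],
        "max": max(latencies),
    }

stats = load_test()
within_budget = stats["p95"] <= P95_BUDGET_SECONDS
print(stats, within_budget)
```

Tracking a high percentile rather than the average matters for real-time systems, because a few slow responses can be exactly the ones that cause harm.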

API tests

API testing checks the endpoints that connect the AI to other software and apps, verifying that APIs return accurate responses. For example, a weather forecasting AI may connect to an API to retrieve real-time meteorological data. Similarly, an AI app that provides travel information can connect to platforms like Skyscanner or Booking via their APIs to list relevant flight dates, times, prices, and available accommodation options.

Testing helps ensure that these APIs return data correctly, handle errors, and keep data exchanges secure. This strategy is ideal for AI products that draw on many sources of information, such as news aggregation tools or logistics tracking systems. If you plan to use your AI closely with API tools, test how well they work together before launching the product.
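Here is a Python sketch of these checks for the weather example: a schema check on the response, graceful handling of upstream errors, and a refusal to send requests over plain HTTP. The field names, the `https://api.example.com` base URL, the plausible temperature range, and the injected `get` callable are all assumptions standing in for whichever real weather API and HTTP client a project uses.

```python
# Hypothetical response schema: field name -> accepted type(s).
REQUIRED_FIELDS = {"city": str, "temperature_c": (int, float), "timestamp": str}

def validate_forecast(payload):
    """Return a list of schema problems in a weather API response (empty if valid)."""
    problems = []
    for field, expected_type in REQUIRED_FIELDS.items():
        if field not in payload:
            problems.append(f"missing field: {field}")
        elif not isinstance(payload[field], expected_type):
            problems.append(f"wrong type for {field}: {type(payload[field]).__name__}")
    temp = payload.get("temperature_c")
    # Assumed sanity range for surface air temperature, in degrees Celsius.
    if isinstance(temp, (int, float)) and not -90 <= temp <= 60:
        problems.append(f"implausible temperature: {temp}")
    return problems

def fetch_forecast(get, base_url, city):
    """Wrapper under test; `get` is any callable returning (status_code, json_dict)."""
    if not base_url.startswith("https://"):
        return {"error": "refusing to send requests over plain HTTP"}
    status, body = get(f"{base_url}/forecast?city={city}")
    if status != 200:
        return {"error": f"upstream returned {status}"}
    problems = validate_forecast(body)
    if problems:
        return {"error": "; ".join(problems)}
    return body

def fake_get(url):
    """Stub HTTP call used in place of a real client during tests."""
    return 200, {"city": "Oslo", "temperature_c": 4.5, "timestamp": "2024-01-01T12:00:00Z"}

result = fetch_forecast(fake_get, "https://api.example.com", "Oslo")
```

Injecting the HTTP call keeps the checks fast and deterministic; the same assertions can then run against a staging endpoint before release.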

Security tests

Security testing identifies vulnerabilities that could expose the AI to security breaches. For example, a face recognition system used for authentication must resist spoofing attempts (e.g., using photos or videos to bypass the system). The same type of testing is important for government apps that store citizens’ private information, as well as banking and other finance-related applications.

This testing also helps AI meet data privacy requirements, which is especially important for applications handling sensitive data, such as AI-driven medical platforms or financial applications. In addition, it evaluates how the system reacts to malicious data, which makes it well suited to high-stakes domains such as cybersecurity or fraud prevention.
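Part of security testing can be automated as a small fuzz-style suite: feed deliberately malformed or hostile inputs to whatever validates requests before they reach the model, and confirm that each one is rejected cleanly instead of crashing or slipping through. `validate_request`, `RejectedInput`, and the 2,000-character limit are hypothetical; spoofing resistance in face recognition needs specialized testing that this sketch does not cover.

```python
MAX_TEXT_LENGTH = 2000  # hypothetical input size limit

class RejectedInput(Exception):
    """Raised when a request is refused before reaching the model."""

def validate_request(payload):
    """Hypothetical gatekeeper run before any input reaches the model."""
    if not isinstance(payload, dict):
        raise RejectedInput("payload must be a JSON object")
    text = payload.get("text")
    if not isinstance(text, str) or not text.strip():
        raise RejectedInput("text must be a non-empty string")
    if len(text) > MAX_TEXT_LENGTH:
        raise RejectedInput("text too long")
    if "\x00" in text:
        raise RejectedInput("control characters are not allowed")
    return text.strip()

MALICIOUS_INPUTS = [
    None,
    "not a dict",
    {"text": 12345},
    {"text": "   "},
    {"text": "A" * (MAX_TEXT_LENGTH + 1)},
    {"text": "hello\x00world"},
]

def run_security_suite(handler, cases):
    """Every case must be rejected cleanly; acceptance or any other error is a finding."""
    findings = []
    for case in cases:
        try:
            handler(case)
            findings.append((case, "accepted"))
        except RejectedInput:
            pass  # expected outcome
        except Exception as exc:  # an unexpected crash is itself a security finding
            findings.append((case, repr(exc)))
    return findings

findings = run_security_suite(validate_request, MALICIOUS_INPUTS)
print(findings or "all malicious inputs rejected")
```

Growing the list of malicious inputs each time an incident or near miss occurs turns this suite into a running record of known attack patterns.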

Conclusion

The testing process for AI systems should be tailored to the specific architecture, functionality, and objectives of each product. In fact, 85% of AI projects fail to reach production because of challenges such as inadequate integration, data complications, and insufficient testing. AI testing encompasses more than verifying operational functionality: it is crucial to ensure that the system is performant, accurate, secure, and able to integrate seamlessly with other applications. For example, AI tools like chatbots need to work effectively with CRM systems to provide quality support. A Zendesk study found that 67% of customers favor AI-driven self-service options.

Ensuring that your AI software runs smoothly is crucial, which is why it pays to consult a tech specialist before putting your new AI product to the test. DeviQA is ready to provide all the required assistance and guide you through the essential steps of the AI testing process for your particular project.