12 minutes to read

Improve user confidence in AI with QA testing

Mykhailo Ralduhin

Senior QA Engineer

61% of people are skeptical of fully trusting artificial intelligence. And the level of trust directly influences the success of an AI-powered product. How to enhance user confidence in AI? Find out actionable strategies and best practices!

Why is it so important for companies to build confidence in AI: 3 main reasons

Building trust in AI is vital for all companies that develop applications based on this technology, as well as for businesses that want to optimize their processes with it. Companies should care about increasing trust in artificial intelligence, as their success directly depends on it. The level of confidence in AI positively affects:

The success of the app. The more the target audience trusts it, the more actively they will purchase and use it.

The level of customer satisfaction. If users trust the software, they will be less biased in evaluating interactions with it. Also, a greater level of confidence allows them to use all the application's features without limits, thereby experiencing and appreciating its full functionality.

The quality and level of AI implementation in workflows. The more employees trust the technology, the more they use it to improve the speed and efficiency of their work.

User confidence in AI: Benefits and risks with real-life examples

Trust consists of a proper assessment of the benefits and risks. If the advantages outweigh the potential threats, users’ trust will be higher. Next, let's look at what benefits artificial intelligence brings to life and work, as well as what AI risks are the most common and how to minimize them.

The right use of artificial intelligence can make life and work easier. Here are some of the most essential benefits of AI-powered applications from the users’ point of view with examples from real life.

Benefits of using AI

Personalized recommendations

Thanks to “smart” algorithms, modern applications can make the user experience as customized as possible. For example:

ML/AI algorithms in shopping apps provide customized recommendations to make finding the right and interesting products easier and quicker. This helps to boost sales and increase revenues.

AI-based healthcare applications can make the right diagnosis and select the appropriate treatment and lifestyle advice, depending on the individual characteristics of the patient.

Educational apps create customized learning plans, taking into account the user’s background, current level of knowledge, and goals. With this, you can increase the time users spend in the app, thus showing it more ads and increasing your profits.

Job search applications can provide personalized job opportunity compilations based on the solicitante’s education, experience, and skills. By improving the job search process, you can attract more job seekers and employers to the product and increase the profit from your app or service.

Deliver exceptional user experiences with our AI testing services

Advanced security

AI algorithms can aid in fraud detection. Many banks and other fintech businesses use technologies that detect atypical behavior or suspicious transactions, identifying and preventing fraudulent or illegal activities.

Enhanced decision-making

AI-based applications help make decisions in everyday life easier and more efficient. For every area of life, there are plenty of AI-based apps that can help make important decisions, for example:

Fitness applications assist in building a proper training program.

Weather forecast apps help to decide what to wear to feel comfortable.

Calorie-counting solutions help to choose the right nutrition plan.

Budget-tracking applications assist in managing finance, and so on.

All these apps' creators can earn a good income from them. The more useful the product is, the more people use it, and the more income can be generated from the sale of the product and the advertisements shown in it.

Work facilitation

38% of people state they use some form of AI daily. The solutions based on artificial intelligence help make their work easier and faster by automating some routine tasks.

Risks of artificial intelligence from the users’ point of view

The use of various AI-driven applications brings many benefits to the users’ lives. However, some risks can be associated with the use of artificial intelligence. Here are some of the most widespread and fearsome risks of AI usage.

AI security risks

The use of AI-based applications is often associated with sharing personal information. For example, to use HR solutions, it is sometimes necessary to provide confidential data about the company (for employers) or private individual details (for candidates). The use of healthcare apps requires private data and details about the health state. Financial solutions collect and store personal data, transaction history, and other sensitive information.

This creates AI cybersecurity risks. In case of hacker attacks or various leaks, private data may end up in the hands of malicious users. That is why users meticulously select AI-based applications, paying great attention to a high level of security. So, software creators should rigorously test their products to eliminate possible vulnerabilities and ensure the safety of user information.

Risks associated with AI bias

AI systems may have biases that lead to:

incorrect answers;

inappropriate performance results;

problems with regulatory compliance.

An illustrative example of the problems associated with bias is the experience of US hospitals that used AI systems to assess health risks. The incorrect operation of the AI model led to discrimination against patients of color. The data used for system training was mainly based on the medical records of white patients. This created the imbalance that caused false results that rated colored people’s risks lower than they should have been.

Inappropriate outputs and other misbehavior of AI

Many users are skeptical about trusting AI because they fear receiving misleading outputs from it. There is indeed such a risk due to training data imbalance. If the AI model has been trained on too monotonous or unreliable information or not tested properly, it may produce incorrect or harmful responses.

Current level of user trust in AI: Facts and statistics

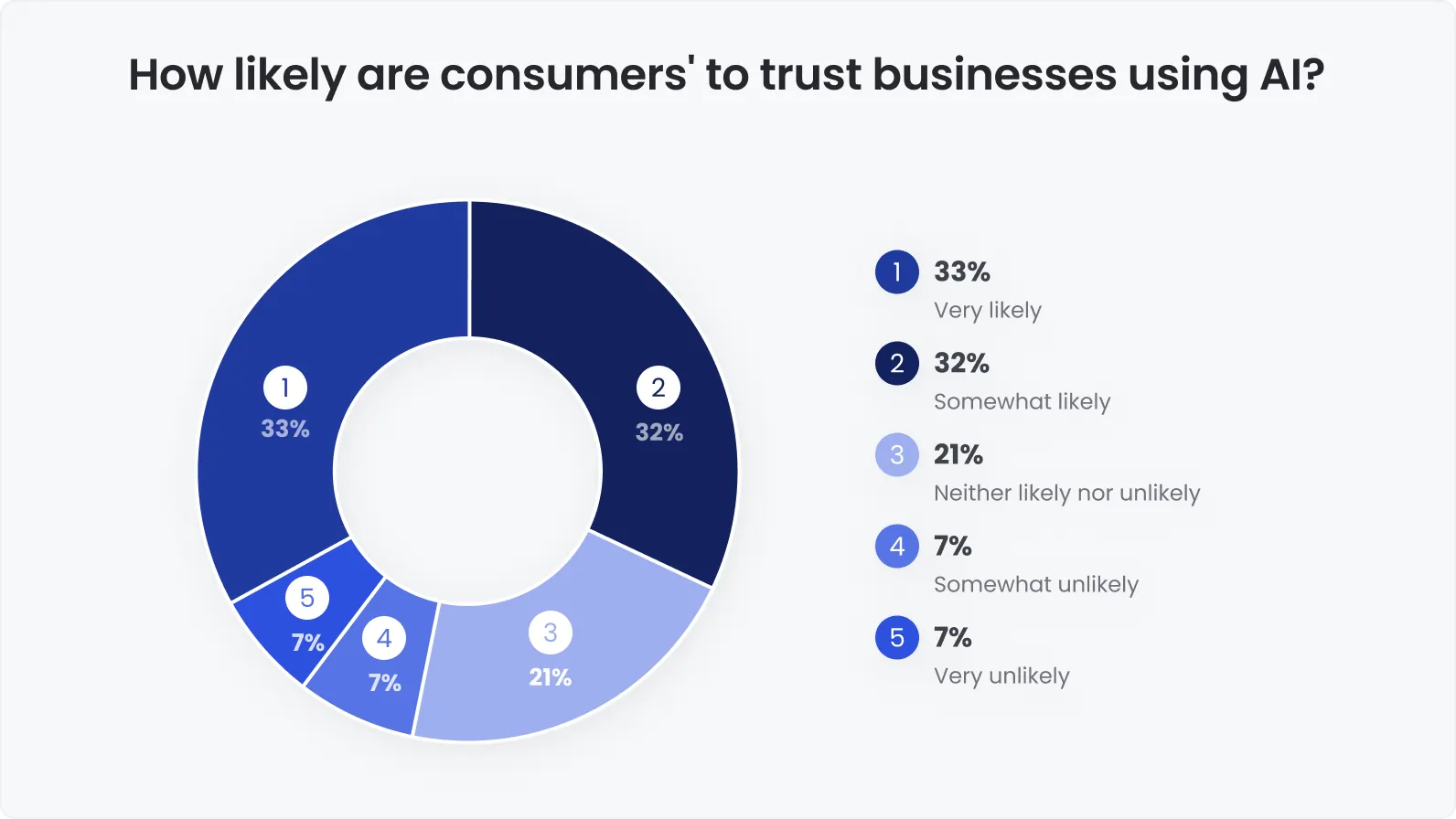

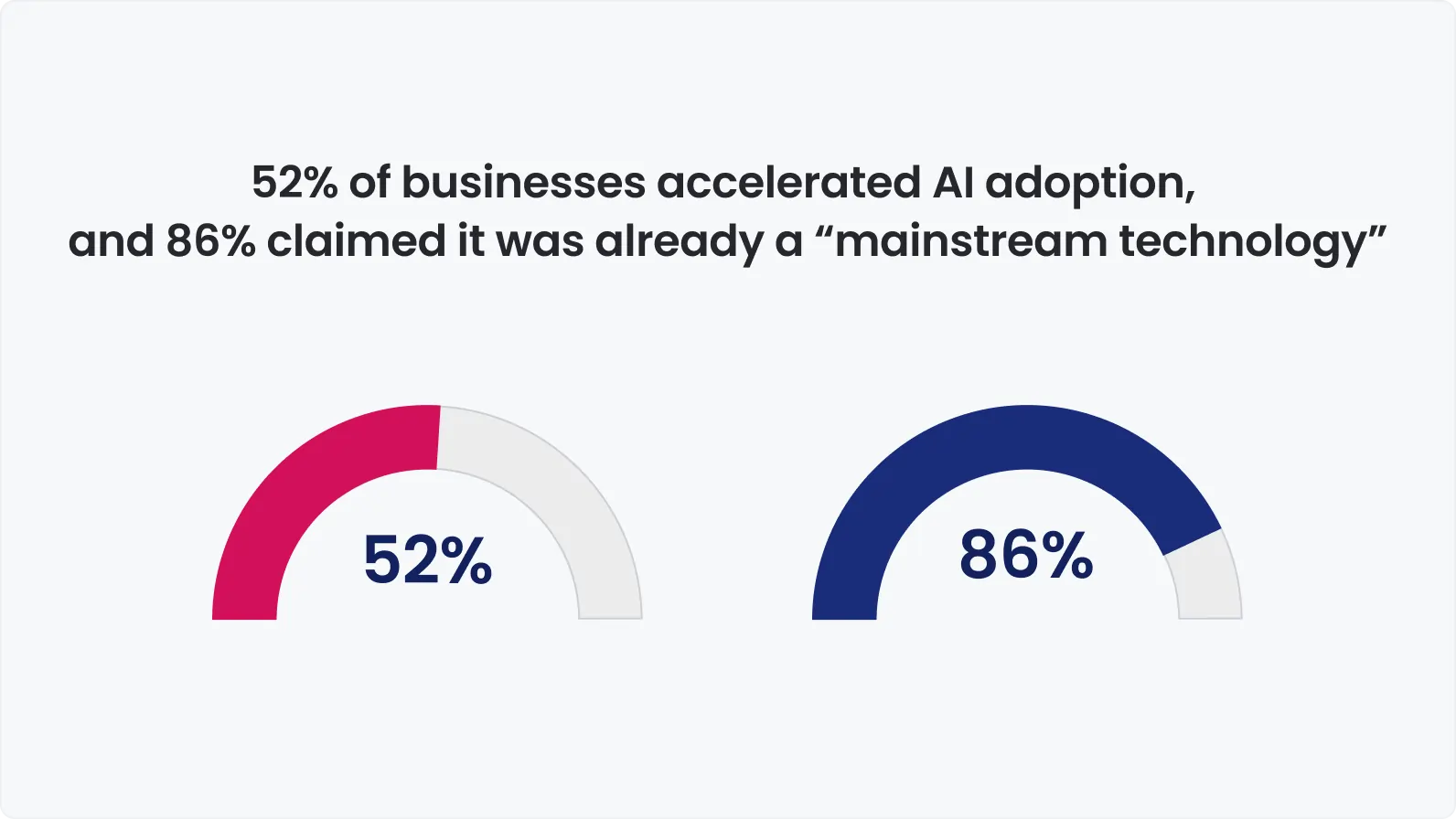

According to the latest research:

61% of respondents are afraid to fully trust AI systems.

67% of interviewed people report that their level of trust in artificial intelligence ranges from low to medium.

Medical AI systems are the most trusted.

HR AI applications have the lowest level of trust.

84% of people consider cybersecurity issues to be the greatest risk when actively using AI.

50% of people believe that the benefits of using AI-based solutions outweigh the risks.

To increase the target audience’s trust in artificial intelligence, it is necessary to constantly work on the quality and level of security of the software. And this cannot be done without thorough AI testing services.

How can QA help companies improve confidence in AI?

Flaws in AI systems’ functioning can lead to numerous undesirable consequences: starting with leakage of users' personal data to malefactors and ending with serious problems related to incorrect or harmful outputs.

This is especially relevant for solutions in such critical niches as healthcare or finance. For example, incorrect work of fintech applications can lead to financial ruin or failure in fraud detection. Misdiagnoses or harmful advice from medical AI systems can cause complications or even death of patients in the long run. To avoid such catastrophic outcomes, rigorous artificial intelligence testing is necessary.

Misbehavior of AI may be harmful not only to its users but also to its creators. One of the real-life examples happened to the Chevrolet company. Its customer service chatbot was influenced by a dishonest client who made it act abnormally. Due to a glitch, the bot sold the car for $1 and made this a legally binding offer. Had the application been thoroughly tested using the adversarial technique, such a vulnerability could have been identified and addressed, helping to avoid problems and losses.

To avoid ethical problems from harmful advice to users or losses due to misbehavior, it is crucial to know how to test AI models effectively. The adversarial technique is especially effective in this case. This is a method within which QA specialists intentionally provoke an AI model for unacceptable behavior with “bad” requests.

Another unpleasant story happened with the educational company called iTutor Group Inc. It used an AI-powered HR system that automatically rejected senior applicants at the age of over 60 due to bias. This has led to legal issues due to the failure to observe anti-agism rules. Quality QA could help identify bias and eliminate it by making training data sets more diverse. This would help the company avoid legal problems.

To avoid these kinds of problems described above, AI testing for the presence of bias is compulsory. If it has been detected to some extent, you should make every effort to eliminate it.

AI confidence implementation recommendations

The target audience’s trust in AI is a crucial point in the success of products based on this technology. The more confident people are in artificial intelligence, the more popular the application will be and the more success a business can achieve with it. Therefore, companies should take care of increasing the potential users’ trust in AI. To instill more confidence in your AI product, you need to take care of all aspects of its quality, such as:

usability;

security;

ethical compliance;

high-quality data for AI training;

accuracy, consistency, and truthfulness of answers;

the absence of bias

regulatory compliance.

To control and improve all these characteristics, thorough quality assurance is needed. Experienced QA engineers know that they should consider all sides of the AI application to comprehensively verify its high quality. The most essential aspects requiring special attention are the following three.

Data

It is imperative that experts carefully monitor the quality of the data used for AI training. This directly affects the work of AI systems. Poor-quality information can lead to irrelevant or misleading outputs, as well as non-compliance with ethical considerations and applicable laws. To avoid these issues, data sets should be:

Accurate. They should contain only reliable facts. Inaccurate information or “fluff” that doesn't have important meaning can lower the AI training quality.

Complete. The data sets should be comprehensive so that AI can have all the necessary information to be trained to perform its functions.

Relevant. The data shouldn’t be out of date. This is especially true for niches that are rapidly developing and frequently updated. For example, an application in the legal field should be trained on information that contains all current law versions and does not contain outdated ones.

Structured. The data should be well-organized and properly labeled so that artificial intelligence can “learn” more effectively.

Diverse. Data sets should contain information about different age, gender, and ethnicity groups to avoid bias.

QA professionals carefully monitor all the above-mentioned data quality aspects. This will help to make the AI solution even more high-quality, robust, and adaptive to further improvements.

Ethics

AI applications are subject to ethical considerations. If an AI model doesn't comply with them, the reputation of the company that developed it can be seriously damaged. To prevent this, the AI system should prioritize:

Responsibility. App creators should realize that they are responsible for the consequences of AI responses to users. This is especially important for solutions in critical niches, such as healthcare.

Fairness. AI-based applications should be able to produce honest, unbiased, and non-discriminatory outputs, equally useful to all population groups.

Transparency. The AI-powered apps’ decision-making algorithms should be clear and understandable. If a company honestly shares the principles of its solutions’ operation, it significantly increases the level of trust on the part of current and potential users.

Human well-being. AI solution’s responses should prioritize the users’ health and comfort. They should not produce harmful outputs.

To ensure that all these requirements are met, QA specialists use all possible methods, such as algorithmic verification, ethical AI evaluation, or adversarial testing.

Controls

It is vital to make sure of the AI model’s business efficiency and make it impossible to misuse it (for example, like in the Chevrolet story). To do this, QA engineers carefully test various angles of its operation, such as:

Reliability and sustainability. The system should be free of serious vulnerabilities that may give space for its misuse.

Regulatory compliance. The solution must comply with all current rules and laws related to AI or personal data storage so that creators don't get any legal issues.

Performance. It is essential to make sure that the application works as expected under different conditions.

Experienced QA experts who know how to test AI applications use a wide range of techniques including performance and attack testing to evaluate all these points.

Conclusion

At this point, the level of people's confidence in AI is relatively low, which can be a barrier to its usage. However, the success of a product based on artificial intelligence directly depends on the target audience’s trust. To make your solution more trustworthy in the eyes of your potential customers, it is essential to maximize its quality. This includes such characteristics as:

Security to keep users’ data safe and sound.

High-quality training data sets to avoid harmful or irrelevant outputs.

The absence of bias that can lead to discrimination and incorrect operation outcomes.

Transparency and clearness of AI model’s decision-making principles.

Meeting ethical standards and legal regulations.

To control all these aspects, thorough QA is necessary. It is crucial to choose QA engineers who know how to test AI and have solid experience in this.

Are you struggling to enhance user trust in your AI application? Contact the DeviQA experts for rigorous AI quality assurance!

Team up with an award-winning software QA and testing company

Trusted by 300+ clients worldwide