Artificial intelligence is one of the biggest hits in today's technology world. It is making its way into every area of business and is actively used across industries. Global AI market revenue was estimated at over 196 billion dollars in 2023 and is expected to reach 1.8 trillion dollars by 2030.

There are already myriad AI-based applications in the world, and more keep appearing. However, not all of them succeed, and many fail because of insufficient quality. That is why testing services for AI-based applications are crucial. Testing this type of software has its own peculiarities and difficulties. What are they? Learn from this article!

What is the role of QA for AI systems?

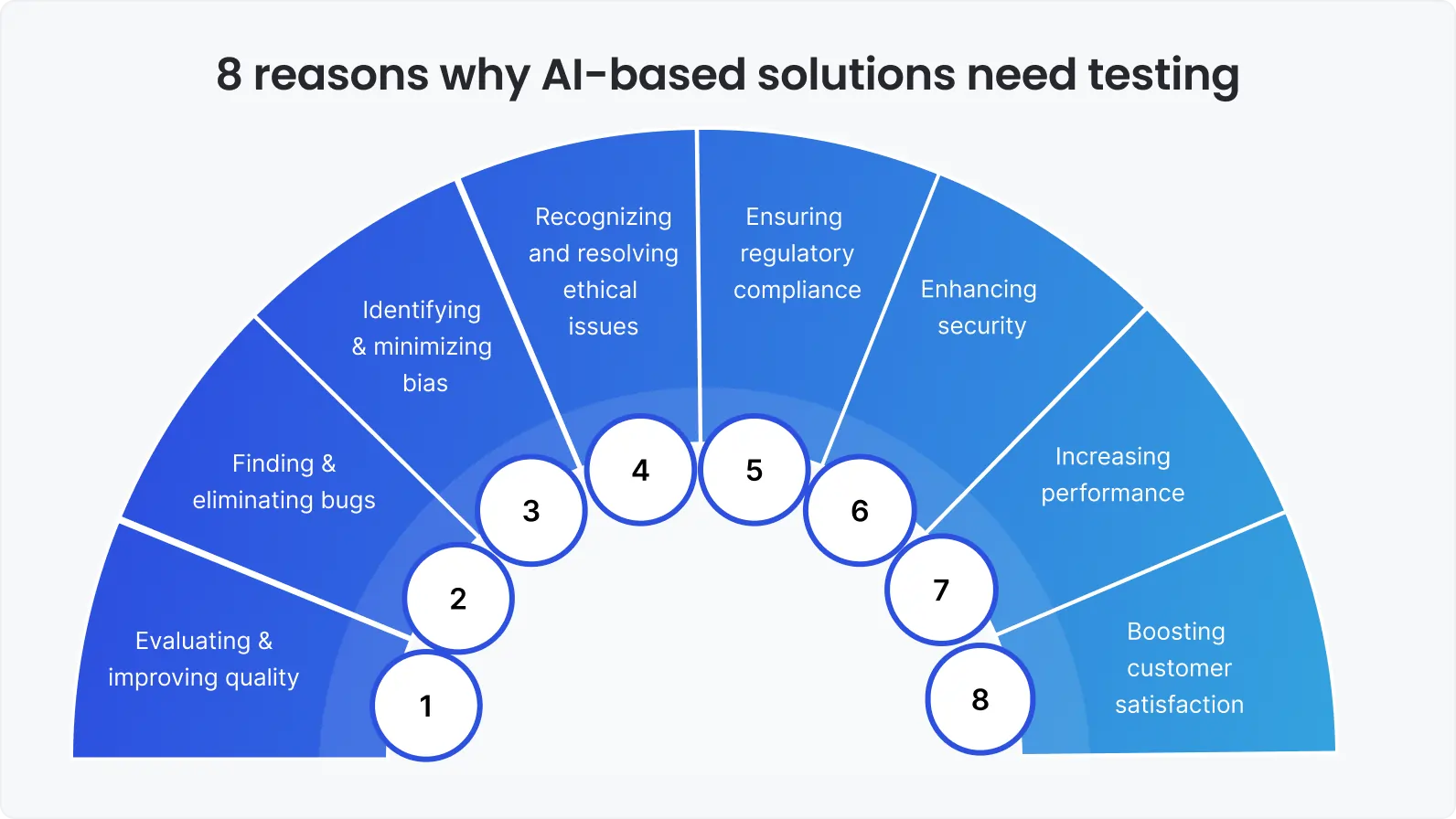

During testing, experts examine the AI model and its pattern of operating under different conditions. This helps to:

Evaluate and improve the quality. Understanding the overall level of quality allows businesses to determine the future development strategy for their projects.

Find and eliminate flaws. During testing, engineers identify errors, inaccuracies, and illogicalities in operation. This makes it possible to understand what needs to be fixed for a more correct and efficient operation of the application.

Identify and minimize bias. This is a very common problem in AI applications, especially in NLP models. Detecting and eliminating it is essential for the software to operate efficiently and without problems.

Recognize and get rid of ethical issues. Problems of this type can be a stumbling block to the success of a product. Their presence can lead to a significant decrease in customer satisfaction and even provoke some legal troubles. Therefore, it is very important to identify and eliminate them early on.

Ensure regulatory compliance. In each area, there are regulations, for example, concerning the collection, storage, and use of data. It is vital to comply with them to avoid problems with the law.

Enhance security. For most users, this criterion may be one of the most critical, as often the use of AI-based applications involves the transmission of sensitive data. Testing helps identify gaps in protective measures and vulnerabilities.

Increase performance. Imperfections in the code can limit the productivity of the application. Testers can check how it performs under a certain load. This can help to identify problems and get rid of them. As a result, the product will be able to work even more efficiently under higher loads.

Boost customer satisfaction. Bugs and defects can significantly worsen the user experience. Once they are removed, the application can become faster, safer, more convenient, and more productive, which makes customers happier.

Why is it important to use unique methods to test AI models?

AI-based applications differ significantly from classic ones. Their biggest and most important distinguishing characteristics are:

Intricate algorithms. This type of software operates according to complex mechanisms: it can “learn”, “make decisions” based on the information received, perform certain tasks autonomously, “predict” outcomes based on the history of previous events or learned data, and so on.

Ongoing learning ability. For AI-driven solutions to work effectively, they must constantly replenish their “knowledge base”. As a result, the way they function keeps evolving.

High changeability. This software has very dynamic operating algorithms that keep improving and may be sensitive to changes in input data. Because these systems are “learning” all the time, their outputs may change over time or vary from case to case.

Due to these peculiarities, classical testing methods and approaches alone are not enough for AI-based software. QA for AI should be dynamic, flexible, and adaptive, just like these systems. Are there any specific methods to test AI? In quality assurance, there are no strict rules, special methodologies, or generally accepted techniques for this. Professionals usually choose strategies and methods individually for each specific case.

How to test AI applications: Key peculiarities and strategies

The basic principle is building a flexible and continuous QA process. It is crucial to test AI-based software not only before its release but also while it is being developed. An ongoing quality assurance process maximizes the quality of the result. With this approach, testers identify problem areas continuously, and developers can remove or add sections of code to make the application work exactly as expected.
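One way to make such a continuous process concrete is an automated quality gate that runs on every change and compares the new model version against the previous one. The sketch below is only an illustration: the two toy models, the `max_drop` tolerance, and the function names are hypothetical and not taken from any particular tool.

```python
def accuracy(model, examples):
    """Share of examples for which the model returns the expected answer."""
    correct = sum(1 for value, expected in examples if model(value) == expected)
    return correct / len(examples)


def quality_gate(candidate_score, baseline_score, max_drop=0.02):
    """Pass only if the candidate is at most max_drop worse than the baseline."""
    return candidate_score >= baseline_score - max_drop


# Hypothetical stand-ins for two versions of a model that detects even numbers.
def baseline_model(n):
    return n % 2 == 0


def candidate_model(n):
    return n % 2 == 0 and n != 4  # regression: wrong answer for the input 4


# Small labeled evaluation set, invented for illustration.
examples = [(n, n % 2 == 0) for n in range(10)]

baseline_score = accuracy(baseline_model, examples)
candidate_score = accuracy(candidate_model, examples)
gate_passed = quality_gate(candidate_score, baseline_score)
print(baseline_score, candidate_score, gate_passed)
```

Here the candidate version gets one of ten examples wrong, so the gate fails and the change would be sent back for review. The tolerance is a judgment call that each team would set for its own product.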

What specific strategies can be used for AI model testing? Some examples follow.

Testing the algorithms

This means checking the application's algorithms themselves for correctness and identifying bugs in them so that they can be fixed. This strategy is suitable for any AI-powered model.

Integration testing may also be performed. It checks the consistency and correctness of various components of algorithms working in conjunction with each other. This helps to identify possible problems that may arise when different elements interact with each other.

Another way to check algorithmic accuracy is the F-score, a metric of a predictive model's performance that combines precision and recall into a single number.

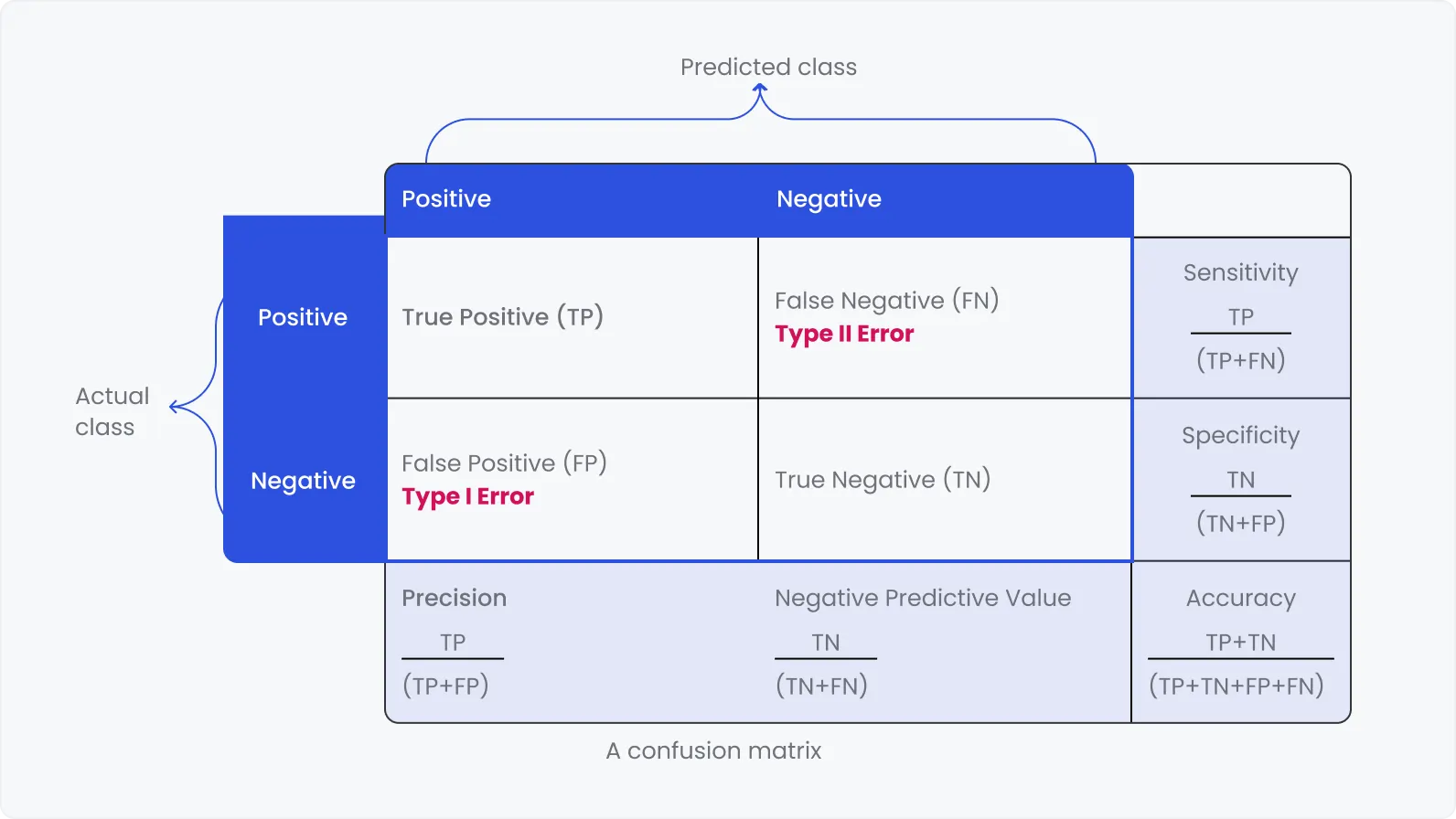

Additionally, a confusion matrix can be used to evaluate the quality of algorithms. This is a table that summarizes the performance of a machine learning algorithm by comparing predicted classes with actual ones. It helps to understand which classes the system confuses.
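As a minimal sketch of both ideas, the code below counts the four cells of a binary confusion matrix and derives the F1 variant of the F-score (the harmonic mean of precision and recall) from them. The labels are invented for illustration, and the function names are hypothetical; in practice a library implementation would normally be used instead.

```python
def confusion_counts(actual, predicted, positive):
    """Count TP, FP, FN, TN with one class treated as the positive class."""
    tp = fp = fn = tn = 0
    for a, p in zip(actual, predicted):
        if p == positive and a == positive:
            tp += 1
        elif p == positive:
            fp += 1
        elif a == positive:
            fn += 1
        else:
            tn += 1
    return tp, fp, fn, tn


def f1_score(tp, fp, fn):
    """F1 is the harmonic mean of precision and recall."""
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


# Hypothetical labels from a spam classifier, invented for illustration.
actual = ["spam", "spam", "ham", "ham", "spam", "ham"]
predicted = ["spam", "ham", "ham", "spam", "spam", "ham"]

tp, fp, fn, tn = confusion_counts(actual, predicted, positive="spam")
f1 = f1_score(tp, fp, fn)
print(f"TP={tp} FP={fp} FN={fn} TN={tn} F1={f1:.3f}")
```

The false positive and false negative cells show exactly where predicted and actual classes are confused, while the F-score condenses the same counts into one figure that is easy to track between releases.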

Data creation and curation

Within this process, specialists manage the information used for AI model training and control its quality. This is crucial because the data set used in training determines how the application will behave later. This strategy may include:

Collecting industry-related information and verifying it against domain standards.

Distributing data into clusters depending on the context and checking that the sorting is correct.

Identifying and removing sample data sets.

Deleting meaningless sections from the information array.

Adding labels to provide the right context for the AI system learning.
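A few of these curation steps can be automated with simple checks. The sketch below is a hedged illustration rather than a complete pipeline: it drops empty entries, flags labels outside an agreed set, and removes duplicates after normalization. Duplicate removal is an added assumption that the list above does not name explicitly, and the sample records and the `curate` function are hypothetical.

```python
def curate(records, allowed_labels):
    """Return cleaned (text, label) pairs and a report of what was dropped."""
    seen = set()
    cleaned = []
    report = {"empty": 0, "bad_label": 0, "duplicate": 0}
    for text, label in records:
        # Collapse whitespace and lowercase so near-identical texts match.
        normalized = " ".join(text.split()).lower()
        if not normalized:
            report["empty"] += 1
        elif label not in allowed_labels:
            report["bad_label"] += 1
        elif normalized in seen:
            report["duplicate"] += 1
        else:
            seen.add(normalized)
            cleaned.append((normalized, label))
    return cleaned, report


# Hypothetical support-ticket data, invented for illustration.
raw = [
    ("Refund my order", "billing"),
    ("refund  my order", "billing"),  # duplicate after normalization
    ("   ", "billing"),               # meaningless entry
    ("App crashes on login", "bug"),
    ("Great product", "praise"),      # label outside the agreed set
]

cleaned, report = curate(raw, allowed_labels={"billing", "bug"})
print(cleaned)
print(report)
```

Keeping a report of dropped records, instead of discarding them silently, lets reviewers verify that the cleaning rules themselves are not removing useful data.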

Real-life testing

This is checking how the system works under conditions that are close to real-life ones. QA engineers choose the specific environment and methods individually for every particular case. This strategy can include many different techniques, such as:

Test triangle. It is a comprehensive application quality assessment strategy that consists of unit, service, and UI testing.

Deployment testing. This checks how the application performs in an environment close to production. It helps to determine whether the code is ready for deployment by revealing errors or other problems that are likely to occur during this process.

White box testing. This is a method of quality assessment where engineers are aware of the principles of the application operation and use them to develop test cases.

Black box testing. It's a kind of “blind test”. In this methodology, engineers only know what the result of the algorithms should be, but not how exactly they should perform the given functions.

Backtesting. It is an assessment of how well the model makes predictions, performed by running it on historical data and comparing its predictions with what actually happened.

Decision analysis. It is checking if the “decisions” the AI system makes are logical and correct.

Non-functional testing. This is an evaluation of software’s properties, such as stability, performance, efficiency, usability, and others, rather than features.

Check for human bias. This is testing to ensure that the AI model does not reproduce cognitive biases typical of people.
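Of the techniques above, backtesting is one of the easiest to sketch in code. The example below walks forward through a historical series, asks the model to predict each point using only earlier points, and reports the mean absolute error. The series is invented, and `naive_forecast` is a hypothetical stand-in for a real predictive model.

```python
def naive_forecast(history):
    """Hypothetical stand-in model: predict the last observed value."""
    return history[-1]


def backtest(series, model, min_history=3):
    """Predict each point from earlier points only; return mean absolute error."""
    errors = []
    for t in range(min_history, len(series)):
        prediction = model(series[:t])  # the model never sees series[t] or later
        errors.append(abs(prediction - series[t]))
    return sum(errors) / len(errors)


# Hypothetical daily order counts, invented for illustration.
daily_orders = [100, 102, 101, 105, 107, 106, 110]

mae = backtest(daily_orders, naive_forecast)
print(f"Mean absolute error: {mae}")
```

The important design point is that each prediction uses only data that would have been available at that moment, so the measured error is not inflated by information from the future.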

Smart interaction testing

This is a strategy for ensuring the quality of work of devices that run on AI-based software. These are, for instance:

smart assistants (such as Google Home);

robotic arms;

drones;

self-driving vehicles, etc.

The kinds of AI that can be tested

Quality assurance is important for all AI-based models and systems, such as:

Machine learning. Algorithms that can “learn” by “memorizing” and generalizing information.

Deep learning. A kind of AI model that “learns” according to principles that resemble the concept of how the human brain works.

Expert systems. Software that can “make decisions” based on “learned” information.

Natural language processing. These are models that process information based on patterns between the components of speech (letters, words, sentences, etc.).

Computer vision. These are algorithms that allow devices to identify and classify objects from graphical sources.

Image processing. These are models that analyze images by perceiving them as 2D signals and process them according to predefined algorithms.

Robotic process automation. This is software that can automatically do routine work, such as copying, filing, transferring data, etc.

Each type of AI-powered solution needs a unique testing strategy. The specific set of techniques and methods is always selected on an individual basis. For example:

NLP models need especially careful testing for bias and ethical issues.

ML and deep learning systems should be thoroughly tested for correct learning and generalization of information.

Robotic process automation tools need rigorous checks for the correct execution of programmed functions.
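For the NLP case, one possible bias check is a counterfactual test: the same sentence is sent to the model several times with only a group-related word changed, and any difference in the output is flagged. The sketch below assumes this approach; `toy_sentiment` is a hypothetical, deliberately biased stand-in for a real model, and the templates are invented.

```python
def toy_sentiment(text):
    """Hypothetical stand-in for an NLP model, with a bias injected on purpose."""
    words = text.lower().split()
    score = 0
    if "brilliant" in words:
        score += 1
    if "she" in words:
        score -= 1  # injected bias so that the check has something to find
    return "positive" if score > 0 else "neutral"


def counterfactual_failures(model, templates, group_terms):
    """Return templates whose output changes when only the group term changes."""
    failures = []
    for template in templates:
        outputs = {term: model(template.format(person=term)) for term in group_terms}
        if len(set(outputs.values())) > 1:
            failures.append((template, outputs))
    return failures


templates = [
    "{person} is a brilliant engineer",
    "{person} went to the store",
]

failures = counterfactual_failures(toy_sentiment, templates, ["He", "She"])
for template, outputs in failures:
    print(template, outputs)
```

A real test suite would use many more templates and group terms, but the principle stays the same: the prediction should not depend on an attribute that is irrelevant to the task.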

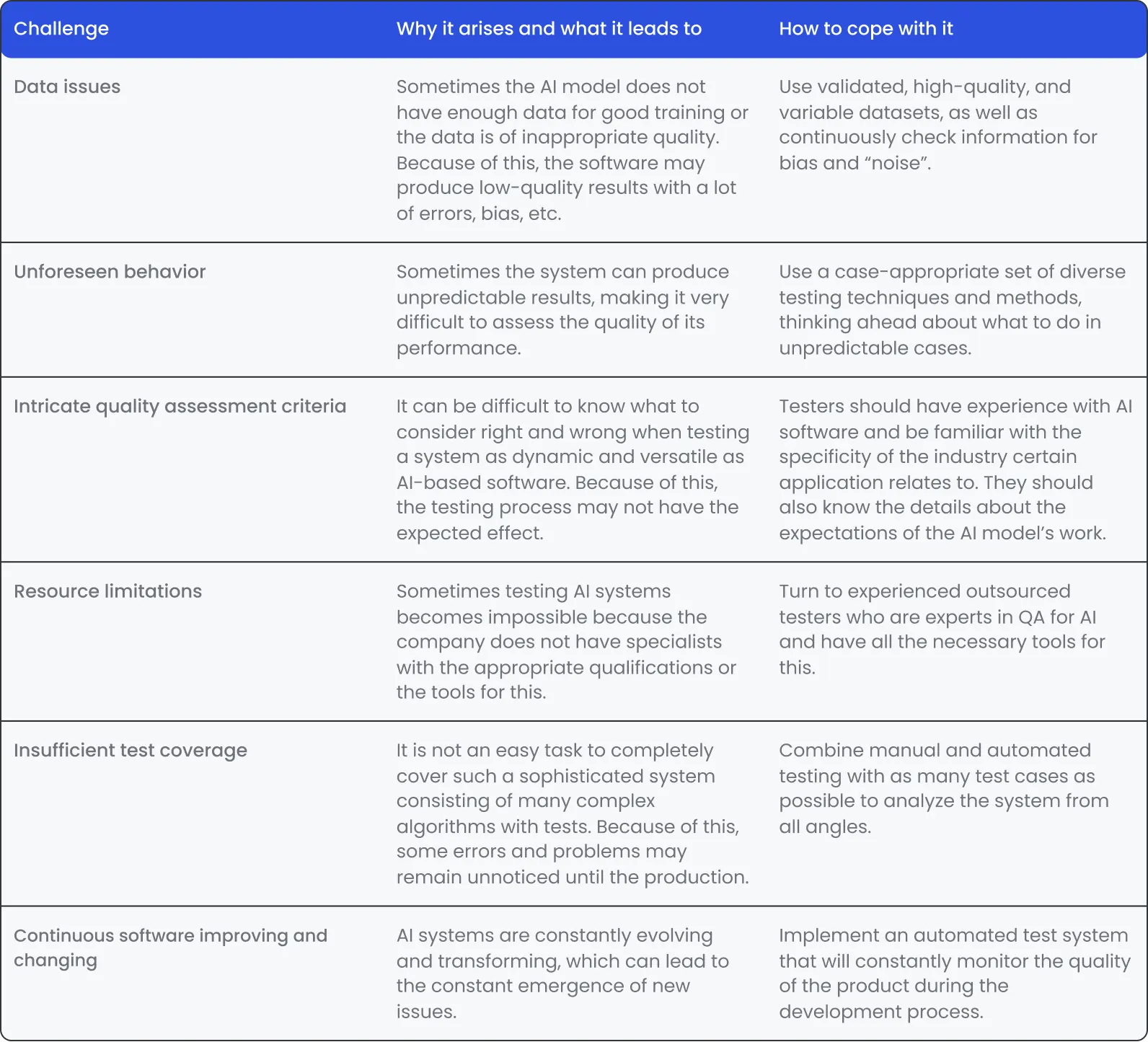

The 6 most common challenges of testing AI systems and how to overcome them

Checking the quality of AI-driven software has many more complexities than testing regular applications. In the table below, let's look at the most widespread challenges and how to cope with them.

6 most common challenges and how to deal with them

To sum up

Testing AI-driven software is not an easy task, and it involves many more difficulties than testing conventional applications. Many companies face challenges such as:

Information-related problems, e.g. low-quality data with “noise” and bias.

Unpredictable application behavior during testing.

Difficulties in defining quality assessment criteria.

Lack of resources, such as necessary tools, qualified testers, etc.

Inadequate test coverage due to the complexity of the system.

New defects constantly arising due to continuous updating and improvement of the software.

To overcome these problems, it is best to turn to highly qualified QA experts who have extensive experience in testing AI models. The DeviQA team develops the most appropriate strategy and selects suitable testing methods, taking into account the type and features of the particular software, to overcome the challenges of testing AI in any industry.